I assume you already know what Natural Language Processing (NLP) is, what it is used for, and where to apply it.

There are many tools for working with NLP. Some popular ones are:

- NLTK

- spaCy

- Stanford CoreNLP

- TextBlob

The tools listed above are among the most widely used for Natural Language Processing.

In this tutorial I will show you how to use Stanford CoreNLP in Python.

What is Stanford CoreNLP?

Stanford CoreNLP is a suite released by the NLP research group at Stanford University. It offers Java-based modules for a range of basic NLP tasks such as POS (part-of-speech) tagging, NER (Named Entity Recognition), dependency parsing, and sentiment analysis.

Besides calling it from Python or Java, you can try the service on the CoreNLP demo page.

Set up Stanford CoreNLP for Python

Install pycorenlp

pip install pycorenlp

Download Stanford CoreNLP

Use the link below to download it:

Install Package

Unzip the downloaded file (stanford-corenlp-full-2018-10-05.zip).

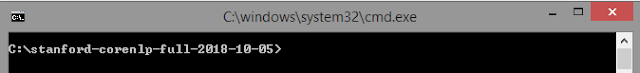

Open a command prompt inside the unzipped folder.

cd stanford-corenlp-full-2018-10-05

I kept the unzipped folder on the C drive.

Start Server

Copy and paste the command below into cmd and press Enter.

java -mx5g -cp "*" edu.stanford.nlp.pipeline.StanfordCoreNLPServer -timeout 10000

Keep this local server running while you use Stanford CoreNLP from Python. Do not close the cmd window. That’s it.

Notes:

- The timeout is in milliseconds; I set it to 10 seconds above. Increase it if you want to pass large blobs of text to the server.

- There are more options; you can list them with --help.

- -mx5g should allocate enough memory, but you may need to adjust the option if your machine is underpowered.
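If you start the server often, the command and the notes above can be captured in a small script. The sketch below only assembles the same command line from Python, so the memory and timeout values live in one place. The helper name `build_server_command` and its parameters are hypothetical and not part of CoreNLP or pycorenlp; the `-port` flag is added on the assumption that you want to spell out the default port 9000.

```python
def build_server_command(memory="5g", timeout_ms=10000, port=9000):
    """Return the java command (as an argument list) that starts the CoreNLP server.

    Hypothetical helper for illustration; it mirrors the command shown above.
    """
    return [
        "java",
        f"-mx{memory}",               # JVM heap size; adjust it to fit your machine
        "-cp", "*",                   # every jar in the current folder goes on the classpath
        "edu.stanford.nlp.pipeline.StanfordCoreNLPServer",
        "-port", str(port),
        "-timeout", str(timeout_ms),  # server-side timeout in milliseconds
    ]


# Show the command with a longer timeout, e.g. for large inputs
print(" ".join(build_server_command(timeout_ms=30000)))
```

Passing the returned list to `subprocess.Popen` with `cwd` set to the unzipped folder would be one way to launch the server from Python instead of from cmd.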

We are now done setting up Stanford CoreNLP for Python and can start using it for different kinds of NLP tasks.

POS (Part-of-Speech) Tagging using Stanford CoreNLP

    from pycorenlp import StanfordCoreNLP

    # Connect to the local CoreNLP server started above
    nlp = StanfordCoreNLP('http://localhost:9000')

    text = "I love you. I hate him. You are nice. He is dumb"

    # Ask for tokenization, sentence splitting and POS tagging
    res = nlp.annotate(text,
                       properties={
                           'annotators': 'tokenize,ssplit,pos',
                           'outputFormat': 'json',
                           'timeout': 1000,
                       })

    # Print the POS tag of every token in every sentence
    for sen in res['sentences']:
        for tok in sen['tokens']:
            print('Word:', tok['word'], '==> POS:', tok['pos'])

Output:

Word: I ==> POS: PRP

Word: love ==> POS: VBP

Word: you ==> POS: PRP

Word: . ==> POS: .

Word: I ==> POS: PRP

Word: hate ==> POS: VBP

Word: him ==> POS: PRP

Word: . ==> POS: .

Word: You ==> POS: PRP

Word: are ==> POS: VBP

Word: nice ==> POS: JJ

Word: . ==> POS: .

Word: He ==> POS: PRP

Word: is ==> POS: VBZ

Word: dumb ==> POS: JJ
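The loop above assumes that `res` is a parsed dictionary. My understanding is that pycorenlp hands back the raw response text when it cannot parse the reply as JSON (for example after a timeout), in which case `res['sentences']` fails with a confusing error. The sketch below shows one way to guard against that and to collect the tags into a list you can reuse. The helper `pos_pairs` is hypothetical, and `sample` is a hand-written dictionary that only mimics the shape of the response, so the snippet runs without a server.

```python
from collections import Counter


def pos_pairs(response):
    """Return (word, tag) pairs from a CoreNLP-style JSON response."""
    # Assumption: a non-dict response means the server sent an error message
    if not isinstance(response, dict):
        raise ValueError(f"Unexpected server response: {response!r}")
    return [
        (tok["word"], tok["pos"])
        for sen in response["sentences"]
        for tok in sen["tokens"]
    ]


# Hand-written sample in the same shape as the response; not real server output
sample = {
    "sentences": [
        {"index": 0, "tokens": [
            {"word": "I", "pos": "PRP"},
            {"word": "love", "pos": "VBP"},
            {"word": "you", "pos": "PRP"},
            {"word": ".", "pos": "."},
        ]},
    ]
}

pairs = pos_pairs(sample)
tag_counts = Counter(tag for _, tag in pairs)  # how often each tag occurs
print(pairs)
print(tag_counts.most_common())
```

With a running server you would pass the result of `nlp.annotate(...)` instead of `sample`.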

Named Entity Recognition (NER) using Stanford CoreNLP

    from pycorenlp import StanfordCoreNLP

    nlp = StanfordCoreNLP('http://localhost:9000')

    text = "Pele was an excellent football player."

    res = nlp.annotate(text,
                       properties={
                           'annotators': 'ner',
                           'outputFormat': 'json',
                           'timeout': 1000,
                       })

    # Each sentence lists its recognized entities under 'entitymentions'
    for sen in res['sentences']:
        for mention in sen['entitymentions']:
            print('Word:', mention['text'], '==> Entity:', mention['ner'])

Output:

Word: Pele ==> Entity: PERSON

Word: football player ==> Entity: TITLE
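Once you have the entity mentions, a common next step is to group them by type. The sketch below does that on a hand-written dictionary that copies the two mentions from the output above, so it runs without a server. The helper name `entities_by_type` is hypothetical and not part of pycorenlp.

```python
from collections import defaultdict


def entities_by_type(response):
    """Group entity mention texts by their NER label."""
    grouped = defaultdict(list)
    for sen in response["sentences"]:
        # .get() keeps the loop safe for sentences without any mentions
        for mention in sen.get("entitymentions", []):
            grouped[mention["ner"]].append(mention["text"])
    return dict(grouped)


# Hand-written sample mirroring the output shown above
sample = {
    "sentences": [
        {"index": 0, "entitymentions": [
            {"text": "Pele", "ner": "PERSON"},
            {"text": "football player", "ner": "TITLE"},
        ]},
    ]
}

print(entities_by_type(sample))
```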

Sentiment polarity score using Stanford CoreNLP

    from pycorenlp import StanfordCoreNLP

    nlp = StanfordCoreNLP('http://localhost:9000')

    text = "I love you. I hate him. You are nice. He is dumb"

    res = nlp.annotate(text,
                       properties={
                           'annotators': 'sentiment',
                           'outputFormat': 'json',
                           'timeout': 1000,
                       })

    for sen in res["sentences"]:
        print("%d: '%s': %s %s" % (
            sen["index"],
            " ".join([tok["word"] for tok in sen["tokens"]]),
            sen["sentimentValue"], sen["sentiment"]))

Output:

0: 'I love you .': 3 Positive

1: 'I hate him .': 1 Negative

2: 'You are nice .': 3 Positive

3: 'He is dumb': 1 Negative
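The sentiment value is a class from 0 (very negative) to 4 (very positive), reported per sentence. If you want a rough score for the whole text, one simple option is to average the sentence values. This is my own simplification, not something CoreNLP computes, and the helper name `average_sentiment` is hypothetical. The sample dictionary copies the values from the output above so the snippet runs without a server.

```python
def average_sentiment(response):
    """Average the per-sentence sentiment values (0 = very negative, 4 = very positive)."""
    # int() handles the value whether it arrives as a string or a number
    scores = [int(sen["sentimentValue"]) for sen in response["sentences"]]
    if not scores:
        return None  # nothing to average
    return sum(scores) / len(scores)


# Hand-written sample mirroring the output shown above
sample = {
    "sentences": [
        {"index": 0, "sentimentValue": "3", "sentiment": "Positive"},
        {"index": 1, "sentimentValue": "1", "sentiment": "Negative"},
        {"index": 2, "sentimentValue": "3", "sentiment": "Positive"},
        {"index": 3, "sentimentValue": "1", "sentiment": "Negative"},
    ]
}

print(average_sentiment(sample))  # 2.0, i.e. neutral on average
```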

How Stanford CoreNLP works

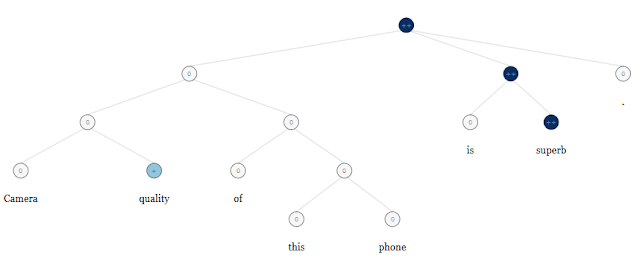

Tasks such as POS tagging, dependency parsing, and NER are handled by models trained on labeled data. The sentiment model is trained on labeled parse trees. Its corpus is based on the data set introduced by Pang and Lee (2005) and consists of 11,855 single sentences extracted from movie reviews.

The sentiment analysis implementation is based on the paper Recursive Deep Models for Semantic Compositionality Over a Sentiment Treebank by Richard Socher et al. The neural network is trained on a sentiment treebank, a new type of data set conceived by the authors that attaches sentiment labels to the individual phrases, of various sizes, that make up each sentence.
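To make the idea of a sentiment treebank more concrete, here is a minimal sketch of a binary parse tree in which every node carries a sentiment label, not just the full sentence. The `Node` class, the `labeled_phrases` helper and the label values are all made up for illustration; they are not CoreNLP's data structures or real treebank annotations.

```python
from dataclasses import dataclass
from typing import Iterator, Optional, Tuple


@dataclass
class Node:
    label: int                     # sentiment class of the phrase under this node
    word: Optional[str] = None     # set for leaves only
    left: Optional["Node"] = None
    right: Optional["Node"] = None

    def text(self) -> str:
        """Return the phrase covered by this node."""
        if self.word is not None:
            return self.word
        return f"{self.left.text()} {self.right.text()}"


def labeled_phrases(node: Node) -> Iterator[Tuple[str, int]]:
    """Yield (phrase, label) for every node, children before parents."""
    if node.word is None:
        yield from labeled_phrases(node.left)
        yield from labeled_phrases(node.right)
    yield node.text(), node.label


# Made-up labels on a 0-4 scale, for illustration only
tree = Node(1,
            left=Node(2, word="He"),
            right=Node(1,
                       left=Node(2, word="is"),
                       right=Node(1, word="dumb")))

for phrase, label in labeled_phrases(tree):
    print(label, phrase)
```

A recursive model works through such a tree bottom-up, predicting a label for each phrase from its children, which is why labels on the inner nodes are useful training data.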

Conclusion:

In this tutorial I have covered:

- Different tools to work with NLP

- What Stanford CoreNLP is

- Setting up Stanford CoreNLP for Python

- Part-of-speech tagging with Stanford CoreNLP

- Named entity recognition using Stanford CoreNLP

- Sentiment analysis using Stanford CoreNLP

- How POS tagging, dependency parsing, NER and sentiment analysis work in Stanford CoreNLP

If you have any questions or suggestions about this topic, leave them in the comment section. I will try my best to answer.