- Why do keyword extraction?

- Keyword extraction using TextRank in Python

- What is happening in the background?

Why do keyword extraction:

- You can judge a comment or sentence within seconds just by looking at its keywords.

- You can decide whether the comment or sentence is worth reading.

- You can also assign the sentence to a category, for example whether a comment is about mobiles or hotels.

- You can use keywords, entities, or key phrases as features to train a supervised model.
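As a rough illustration of the last point, extracted keywords can be turned into 0/1 feature vectors that a classifier can consume. This is a minimal sketch: the helper names `build_vocabulary` and `to_feature_vector` and the keyword lists are hypothetical, not part of PyTextRank.

```python
def build_vocabulary(keyword_lists):
    """Map every distinct keyword to a column index, in sorted order."""
    vocab = sorted({kw for kws in keyword_lists for kw in kws})
    return {kw: i for i, kw in enumerate(vocab)}


def to_feature_vector(keywords, vocab):
    """Return a 0/1 vector with a 1 in the column of each known keyword."""
    vec = [0] * len(vocab)
    for kw in keywords:
        if kw in vocab:
            vec[vocab[kw]] = 1
    return vec


# Hypothetical keyword lists, one per comment
comment_keywords = [
    ["flipkart", "google search"],
    ["snapdeal"],
    ["amazone", "google search"],
]

vocab = build_vocabulary(comment_keywords)
features = [to_feature_vector(kws, vocab) for kws in comment_keywords]
print(vocab)
print(features)
```

Each row of `features` can then be paired with a label and passed to any supervised learner.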

Setting up PyTextRank in Python:

Keyword extraction using PyTextRank in Python:

- Index each word of the text.

- Lemmatize each word.

- Tag each word with its part of speech (POS).

doc = spacy_nlp(graf_text)

# PyTextRank (these calls belong to the legacy 1.x API)
import json

import pytextrank

# Sample text
sample_text = 'I Like Flipkart. He likes Amazone. she likes Snapdeal. Flipkart and amazone is on top of google search.'

# Create the dictionary to feed into the JSON file
file_dic = {"id": 0, "text": sample_text}

# Create test.json and write file_dic into it
with open('test.json', 'w') as outfile:
    json.dump(file_dic, outfile)

path_stage0 = "test.json"
path_stage1 = "o1.json"

# Stage 1: parse the text with pytextrank and write one JSON record per line
with open(path_stage1, 'w') as f:
    for graf in pytextrank.parse_doc(pytextrank.json_iter(path_stage0)):
        f.write("%s\n" % pytextrank.pretty_print(graf._asdict()))
        print(pytextrank.pretty_print(graf._asdict()))

Output:

{"graf": [[0, "I", "i", "PRP", 0, 0], [1, "Like", "like", "VBP", 1, 1], [2, "Flipkart", "flipkart", "NNP", 1, 2], [0, ".", ".", ".", 0, 3]], "id": 0, "sha1": "c09f142dabeb8465f5c30d1c4ef7a0d842ffb99c"}
{"graf": [[0, "He", "he", "PRP", 0, 4], [1, "likes", "like", "VBZ", 1, 5], [3, "Amazone", "amazone", "NNP", 1, 6], [0, ".", ".", ".", 0, 7]], "id": 0, "sha1": "307041869f5cba8a798708d64ee83f290098e00a"}
{"graf": [[0, "she", "she", "PRP", 0, 8], [1, "likes", "like", "VBZ", 1, 9], [4, "Snapdeal", "snapdeal", "NNP", 1, 10], [0, ".", ".", ".", 0, 11]], "id": 0, "sha1": "cfd16187a0ddb43d4e412ae83acc9312fc0da922"}
{"graf": [[2, "Flipkart", "flipkart", "NN", 1, 12], [0, "and", "and", "CC", 0, 13], [3, "amazone", "amazone", "JJ", 1, 14], [5, "is", "be", "VBZ", 1, 15], [0, "on", "on", "IN", 0, 16], [6, "top", "top", "NN", 1, 17], [0, "of", "of", "IN", 0, 18], [7, "google", "google", "NNP", 1, 19], [8, "search", "search", "NN", 1, 20], [0, ".", ".", ".", 0, 21]], "id": 0, "sha1": "f70b4cb49e04ac5586a4b07898212a7bbb673649"}
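Each stage 1 record is plain JSON, so it can be read back with the standard library. The sketch below parses the first record (written with straight double quotes) into named tuples. The field names in `Token` are an assumption based on the order of the six values in each entry, not names taken from PyTextRank.

```python
import json
from collections import namedtuple

# Field names are an assumption inferred from the order of values in each entry
Token = namedtuple("Token", "word_id raw lemma pos keep idx")

# First record of the stage 1 output
line = (
    '{"graf": [[0, "I", "i", "PRP", 0, 0], [1, "Like", "like", "VBP", 1, 1], '
    '[2, "Flipkart", "flipkart", "NNP", 1, 2], [0, ".", ".", ".", 0, 3]], '
    '"id": 0, "sha1": "c09f142dabeb8465f5c30d1c4ef7a0d842ffb99c"}'
)

record = json.loads(line)
tokens = [Token(*fields) for fields in record["graf"]]

# Lemmas of the words flagged with keep == 1
kept = [t.lemma for t in tokens if t.keep == 1]
print(kept)
```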

path_stage1 = "o1.json"
path_stage2 = "o2.json"

# Stage 2: build the word graph, rank it, and write the ranked key phrases
graph, ranks = pytextrank.text_rank(path_stage1)
pytextrank.render_ranks(graph, ranks)

with open(path_stage2, 'w') as f:
    for rl in pytextrank.normalize_key_phrases(path_stage1, ranks):
        f.write("%s\n" % pytextrank.pretty_print(rl._asdict()))
        print(pytextrank.pretty_print(rl))

Output:

["google search", 0.32500626838725255, [7, 8], "np", 1]
["search", 0.16250313419362628, [8], "nn", 1]
["top", 0.12701509697944047, [6], "nn", 1]
["amazone", 0.12383387876447217, [3], "np", 1]
["google", 0.08783938094837528, [7], "nnp", 1]
["snapdeal", 0.08690112036341668, [4], "np", 2]
["flipkart", 0.08690112036341668, [2], "np", 3]
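To use these results, you usually keep only the highest-ranked phrases. The sketch below works directly on the printed rows. It assumes the positions mean phrase, rank, word ids, POS label, and count, which is an interpretation of the output rather than a documented schema, and `top_keyphrases` is a hypothetical helper.

```python
# Rows copied from the stage 2 output
# Assumed layout: [phrase, rank, word ids, POS label, count]
ranked = [
    ["google search", 0.32500626838725255, [7, 8], "np", 1],
    ["search", 0.16250313419362628, [8], "nn", 1],
    ["top", 0.12701509697944047, [6], "nn", 1],
    ["amazone", 0.12383387876447217, [3], "np", 1],
    ["google", 0.08783938094837528, [7], "nnp", 1],
    ["snapdeal", 0.08690112036341668, [4], "np", 2],
    ["flipkart", 0.08690112036341668, [2], "np", 3],
]


def top_keyphrases(rows, k):
    """Return the k phrases with the highest rank."""
    ordered = sorted(rows, key=lambda row: row[1], reverse=True)
    return [row[0] for row in ordered[:k]]


top = top_keyphrases(ranked, 3)
total = sum(row[1] for row in ranked)
print(top)
print(total)
```

In this sample the seven ranks add up to 1 within floating-point error, so each value can be read as the phrase's share of the total score.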

How does the PyTextRank algorithm work?

| Word_Id | Raw_Word | Lemma_Word | POS | Keep | Idx |
|---|---|---|---|---|---|
| 0 | "I" | "i" | "PRP" | 0 | 0 |
| 1 | "Like" | "like" | "VBP" | 1 | 1 |
| 2 | "Flipkart" | "flipkart" | "NNP" | 1 | 2 |
| 0 | "." | "." | "." | 0 | 3 |
| 0 | "He" | "he" | "PRP" | 0 | 4 |
| 1 | "likes" | "like" | "VBZ" | 1 | 5 |
| 3 | "Amazone" | "amazone" | "NNP" | 1 | 6 |
| 0 | "." | "." | "." | 0 | 7 |
| 0 | "she" | "she" | "PRP" | 0 | 8 |
| 1 | "likes" | "like" | "VBZ" | 1 | 9 |
| 4 | "Snapdeal" | "snapdeal" | "NNP" | 1 | 10 |
| 0 | "." | "." | "." | 0 | 11 |
| 2 | "Flipkart" | "flipkart" | "NN" | 1 | 12 |
| 0 | "and" | "and" | "CC" | 0 | 13 |
| 3 | "amazone" | "amazone" | "JJ" | 1 | 14 |
| 5 | "is" | "be" | "VBZ" | 1 | 15 |
| 0 | "on" | "on" | "IN" | 0 | 16 |
| 6 | "top" | "top" | "NN" | 1 | 17 |
| 0 | "of" | "of" | "IN" | 0 | 18 |
| 7 | "google" | "google" | "NNP" | 1 | 19 |
| 8 | "search" | "search" | "NN" | 1 | 20 |
| 0 | "." | "." | "." | 0 | 21 |
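The Word_Id column in the table above looks as if each distinct lowercased lemma among the kept words receives the next free id, while skipped words get 0. The sketch below reproduces that pattern for the first two sentences. The rule is inferred from the table, not taken from PyTextRank's source, and `assign_word_ids` is a hypothetical helper.

```python
# (raw word, lemma, keep flag) for the first two sentences of the table
rows = [
    ("I", "i", 0), ("Like", "like", 1), ("Flipkart", "flipkart", 1), (".", ".", 0),
    ("He", "he", 0), ("likes", "like", 1), ("Amazone", "amazone", 1), (".", ".", 0),
]


def assign_word_ids(rows):
    """Give each distinct kept lemma the next free id; skipped words get 0."""
    ids = {}
    result = []
    for _raw, lemma, keep in rows:
        key = lemma.lower()
        if keep:
            if key not in ids:
                ids[key] = len(ids) + 1
            result.append(ids[key])
        else:
            result.append(0)
    return result


word_ids = assign_word_ids(rows)
print(word_ids)
```

Because "Like" and "likes" share the lemma "like", both receive id 1, which is how repeated words end up as a single node in the graph.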

1. Lowercase each word, for example "Flipkart" becomes "flipkart".

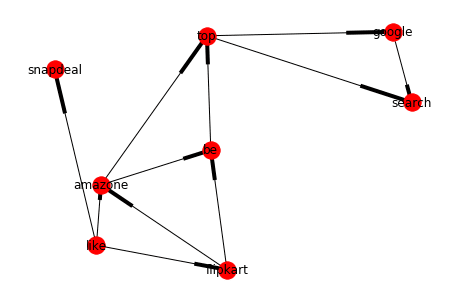

- First, it builds a weighted graph of the words.

- Then it calculates the rank of each word using Google's PageRank algorithm.
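The two steps above can be sketched in plain Python. This is a simplified illustration, not PyTextRank's implementation: it assumes words are linked when they appear within a small window of each other in the same sentence, and it runs a basic power-iteration PageRank. The `window`, `damping`, and `iterations` values are illustrative choices, so the scores will not match the library's output.

```python
def build_graph(sentences, window=2):
    """Link each word to the next `window` words in its sentence (undirected, weighted)."""
    graph = {}
    for words in sentences:
        for i, word in enumerate(words):
            graph.setdefault(word, {})
            for other in words[i + 1 : i + 1 + window]:
                if other == word:
                    continue
                graph.setdefault(other, {})
                graph[word][other] = graph[word].get(other, 0) + 1
                graph[other][word] = graph[other].get(word, 0) + 1
    return graph


def pagerank(graph, damping=0.85, iterations=50):
    """Power iteration: each word passes rank to its neighbours in proportion to edge weight."""
    n = len(graph)
    ranks = {node: 1.0 / n for node in graph}
    out_weight = {node: sum(edges.values()) for node, edges in graph.items()}
    for _ in range(iterations):
        updated = {}
        for node, edges in graph.items():
            incoming = sum(ranks[nb] * w / out_weight[nb] for nb, w in edges.items())
            updated[node] = (1 - damping) / n + damping * incoming
        ranks = updated
    return ranks


# Kept lemmas of the sample text, one list per sentence
sentences = [
    ["like", "flipkart"],
    ["like", "amazone"],
    ["like", "snapdeal"],
    ["flipkart", "amazone", "be", "top", "google", "search"],
]

graph = build_graph(sentences)
ranks = pagerank(graph)
for word, score in sorted(ranks.items(), key=lambda item: item[1], reverse=True):
    print(f"{word}: {score:.4f}")
```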

import networkx as nx
import pylab as plt

# Draw network plot
nx.draw(graph, with_labels=True)
plt.show()

Output:

Word ranks from Google's PageRank algorithm:

ranks = nx.pagerank(graph)
print(ranks)

Output:

Step 2:

| Word_Id | Raw_Word | Lemma_Word | POS | Is_Stop |
|---|---|---|---|---|
| 0 | "I" | "i" | "PRP" | False |
| 1 | "Like" | "like" | "VBP" | True |
| 2 | "Flipkart" | "flipkart" | "NNP" | False |
| 0 | "." | "." | "." | False |
| 0 | "He" | "he" | "PRP" | False |
| 1 | "likes" | "like" | "VBZ" | True |
| 3 | "Amazone" | "amazone" | "NNP" | False |
| 0 | "." | "." | "." | False |
| 0 | "she" | "she" | "PRP" | True |
| 1 | "likes" | "like" | "VBZ" | True |
| 4 | "Snapdeal" | "snapdeal" | "NNP" | False |
| 0 | "." | "." | "." | False |
| 2 | "Flipkart" | "flipkart" | "NN" | False |
| 0 | "and" | "and" | "CC" | True |
| 3 | "amazone" | "amazone" | "JJ" | False |
| 5 | "is" | "be" | "VBZ" | True |
| 0 | "on" | "on" | "IN" | True |
| 6 | "top" | "top" | "NN" | True |
| 0 | "of" | "of" | "IN" | True |
| 7 | "google" | "google" | "NNP" | False |
| 8 | "search" | "search" | "NN" | False |
| 0 | "." | "." | "." | False |

2. Collect entities:

| Word_Id | Raw_Word | Lemma_Word | POS | Is_Stop |
|---|---|---|---|---|
| 2 | "Flipkart" | "flipkart" | "NNP" | False |
| 3 | "Amazone" | "amazone" | "NNP" | False |
| 4 | "Snapdeal" | "snapdeal" | "NNP" | False |
| 2 | "Flipkart" | "flipkart" | "NN" | False |
| 7 | "google" | "google" | "NNP" | False |
| 8 | "search" | "search" | "NN" | False |
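Comparing the entity table with the full token table suggests a simple rule: keep tokens whose POS tag is a noun tag (starting with "NN") and which are not stop words. The sketch below applies that rule to the sample tokens. The rule is inferred from the two tables rather than from PyTextRank's source, and `collect_entities` is a hypothetical helper.

```python
# (raw word, POS tag, is_stop) for every token of the sample text
tokens = [
    ("I", "PRP", False), ("Like", "VBP", True), ("Flipkart", "NNP", False), (".", ".", False),
    ("He", "PRP", False), ("likes", "VBZ", True), ("Amazone", "NNP", False), (".", ".", False),
    ("she", "PRP", True), ("likes", "VBZ", True), ("Snapdeal", "NNP", False), (".", ".", False),
    ("Flipkart", "NN", False), ("and", "CC", True), ("amazone", "JJ", False),
    ("is", "VBZ", True), ("on", "IN", True), ("top", "NN", True), ("of", "IN", True),
    ("google", "NNP", False), ("search", "NN", False), (".", ".", False),
]


def collect_entities(tokens):
    """Keep noun-tagged tokens (NN, NNP, ...) that are not stop words."""
    return [raw for raw, pos, is_stop in tokens if pos.startswith("NN") and not is_stop]


entities = collect_entities(tokens)
print(entities)
```

Note that "top" is tagged NN but flagged as a stop word, and the lowercase "amazone" is tagged JJ, so neither passes the filter.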


import json

import networkx as nx
import pylab as plt
import pytextrank

# Stage 1: parse the sample text
sample_text = 'I Like Flipkart. He likes Amazone. she likes Snapdeal. Flipkart and amazone is on top of google search.'

# Create the dictionary to feed into the JSON file
file_dic = {"id": 0, "text": sample_text}

# Create test.json and write file_dic into it
with open('test.json', 'w') as outfile:
    json.dump(file_dic, outfile)

path_stage0 = "test.json"
path_stage1 = "o1.json"

with open(path_stage1, 'w') as f:
    for graf in pytextrank.parse_doc(pytextrank.json_iter(path_stage0)):
        f.write("%s\n" % pytextrank.pretty_print(graf._asdict()))
        print(pytextrank.pretty_print(graf._asdict()))

# Stage 2: extract keywords
path_stage2 = "o2.json"

graph, ranks = pytextrank.text_rank(path_stage1)
pytextrank.render_ranks(graph, ranks)

with open(path_stage2, 'w') as f:
    for rl in pytextrank.normalize_key_phrases(path_stage1, ranks):
        f.write("%s\n" % pytextrank.pretty_print(rl._asdict()))
        print(pytextrank.pretty_print(rl))

# Google PageRank: draw the word graph
nx.draw(graph, with_labels=True)
plt.show()

- What is a keyword?

- Why do keyword extraction?

- Setting up PyTextRank for Python

- Keyword extraction using PyTextRank in Python

- What is happening in the background of PyTextRank?
