In my last post I explained how to prepare custom training data for Named Entity Recognition (NER) using an annotation tool called WebAnno.

But the JSON output from WebAnno is not in the format that spaCy expects for training a custom NER model.

In this post I will show you how to convert that output into spaCy-formatted training data, and then how to train a custom NER model with it.

Prerequisites

While writing the code for this tutorial I used:

- Python version: 3.6.3

- spaCy version: 2.1.6

- en_core_web_sm (spaCy small English model) version: 2.1.0


Prepare spaCy-formatted custom training data for the NER model

Before we start writing Python code, let's have a look at the spaCy training data format for Named Entity Recognition (NER).

For each sentence we need to provide the sentence itself together with the label of each entity and its character position (start and end) within that sentence.

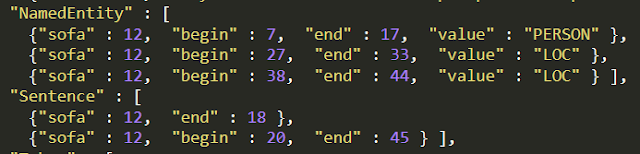
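To make the format concrete, here is a minimal sketch using the two example sentences from this tutorial. The names `example_data` and `entity_texts` are only for illustration. Slicing a sentence with the start and end offsets should give back exactly the entity text, which is a quick way to check annotations.

```python
# Each item pairs a sentence with a dict of entity annotations.
# An annotation is (start_char, end_char, label); end_char is exclusive.
example_data = [
    ("Who is Shaka Khan?", {"entities": [(7, 17, "PERSON")]}),
    ("I like London and Berlin.", {"entities": [(7, 13, "LOC"), (18, 24, "LOC")]}),
]


def entity_texts(text, annotations):
    """Return (entity_text, label) pairs by slicing the sentence with each offset."""
    return [(text[start:end], label) for start, end, label in annotations["entities"]]


for text, annotations in example_data:
    print(entity_texts(text, annotations))
```

If a slice returns a partial word or includes extra whitespace, the offsets for that entity are probably wrong.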

Now if you look carefully at the output JSON file from WebAnno (from the last tutorial), you will find keys such as NamedEntity, Sentence and _referenced_fss.

· NamedEntity key: lists the name and position (start and end) of each entity relative to the whole document (later, in the Python code, we need to convert these to positions within each sentence)

· Sentence key: lists the start and end position of each sentence

· _referenced_fss key: holds the actual text of all the sentences
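The layout of these keys can be pictured with a small hand-written stand-in for the WebAnno JSON. The sample below is an assumption built to match the keys used in this post: the id "12" and the offsets are illustrative, and a real export contains more fields. The first sentence is written without a `begin` value to mirror the step later in the code that sets it to 0 by hand.

```python
import json

# Hand-written stand-in for a WebAnno JSON export (illustrative, not real output).
sample_json = r'''
{
  "_views": {
    "_InitialView": {
      "NamedEntity": [
        {"begin": 7,  "end": 17, "value": "PERSON"},
        {"begin": 27, "end": 33, "value": "LOC"},
        {"begin": 38, "end": 44, "value": "LOC"}
      ],
      "Sentence": [
        {"end": 18},
        {"begin": 20, "end": 45}
      ]
    }
  },
  "_referenced_fss": {
    "12": {"sofaString": "Who is Shaka Khan?\r\nI like London and Berlin."}
  }
}
'''

data = json.loads(sample_json)
view = data["_views"]["_InitialView"]
document_text = data["_referenced_fss"]["12"]["sofaString"]

# Entity offsets are relative to the whole document, not to a single sentence.
for ent in view["NamedEntity"]:
    print(ent["value"], repr(document_text[ent["begin"]:ent["end"]]))
```

The second sentence starts at offset 20 because the two line-break characters after the first sentence also count towards the document positions.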

Now let's start coding to create the final spaCy-formatted training data for a custom Named Entity Recognition (NER) model using spaCy and Python.

###### Prepare spaCy formatted training data for custom NER #######

import json

# Read output JSON file from WebAnno (annotation tool)
with open('input_json.json') as data_file:
    data = json.load(data_file)

# Extract original sentences
# (assumes sentences in the document text are separated by '\r\n' line breaks)
sentences_list = data['_referenced_fss']['12']['sofaString'].split('\r\n')

# Extract entity start/end positions and names
ent_loc = data['_views']['_InitialView']['NamedEntity']

# Extract sentence start/end positions
Sentence = data['_views']['_InitialView']['Sentence']

# Set first sentence starting position to 0
Sentence[0]['begin'] = 0

# Prepare spaCy formatted training data
TRAIN_DATA = []
ent_list = []
for sl in range(len(Sentence)):
    ent_list_sen = []
    for el in range(len(ent_loc)):
        if(ent_loc[el]['begin'] >= Sentence[sl]['begin'] and ent_loc[el]['end'] <= Sentence[sl]['end']):
            ## Subtract the sentence start from the entity positions, because WebAnno reports positions relative to the whole document
            ent_list_sen.append([(ent_loc[el]['begin']-Sentence[sl]['begin']),(ent_loc[el]['end']-Sentence[sl]['begin']),ent_loc[el]['value']])
    ent_list.append(ent_list_sen)
    ## Create blank dictionary
    ent_dic = {}
    ## Fill value to the dictionary
    ent_dic['entities'] = ent_list[-1]
    ## Prepare final training data
    TRAIN_DATA.append([sentences_list[sl],ent_dic])

print(TRAIN_DATA)

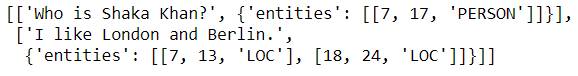

Output:

[['Who is Shaka Khan?', {'entities': [[7, 17, 'PERSON']]}],
 ['I like London and Berlin.', {'entities': [[7, 13, 'LOC'], [18, 24, 'LOC']]}]]
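As an alternative sketch, the same conversion can be written as a function that cuts each sentence out of the document text using the Sentence offsets, instead of splitting on line breaks. This keeps sentences and entity offsets aligned even if the line-break characters differ from what is expected. The function name `webanno_to_spacy`, its `sofa_id` parameter and the small sample input are assumptions for illustration; the key names follow the code above.

```python
def webanno_to_spacy(data, sofa_id="12"):
    """Convert a parsed WebAnno JSON dict into spaCy-style training data."""
    view = data["_views"]["_InitialView"]
    document_text = data["_referenced_fss"][sofa_id]["sofaString"]
    train_data = []
    for sentence in view["Sentence"]:
        # A missing 'begin' is treated as offset 0 (assumption based on the code above).
        sent_begin = sentence.get("begin", 0)
        sent_end = sentence["end"]
        # Keep entities that lie inside this sentence and shift their offsets.
        entities = [
            [ent.get("begin", 0) - sent_begin, ent["end"] - sent_begin, ent["value"]]
            for ent in view["NamedEntity"]
            if ent.get("begin", 0) >= sent_begin and ent["end"] <= sent_end
        ]
        train_data.append([document_text[sent_begin:sent_end], {"entities": entities}])
    return train_data


# Hand-written sample in the same shape as the WebAnno output (illustrative only).
sample = {
    "_views": {
        "_InitialView": {
            "NamedEntity": [
                {"begin": 7, "end": 17, "value": "PERSON"},
                {"begin": 27, "end": 33, "value": "LOC"},
                {"begin": 38, "end": 44, "value": "LOC"},
            ],
            "Sentence": [{"end": 18}, {"begin": 20, "end": 45}],
        }
    },
    "_referenced_fss": {
        "12": {"sofaString": "Who is Shaka Khan?\r\nI like London and Berlin."}
    },
}

print(webanno_to_spacy(sample))
```

Because every sentence is taken from its own offsets, the number of sentences and the number of Sentence entries cannot drift apart, which is one possible cause of an index error in the loop-based version.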

Now that the spaCy-formatted training data is ready, I will show you how to train a custom Named Entity Recognition (NER) model in Python using spaCy.

One important point: there are two ways to train a custom NER model

1. Train a new NER model

2. Update an existing spaCy model

Note: The training code below is adapted from the examples in the spaCy GitHub repository. You can always refer to them.


1. Train a new NER model using spaCy

Now let's train a fresh NER model using the prepared custom training data.

import spacy
import random
from spacy.util import minibatch, compounding
from pathlib import Path

# Define output folder to save new model
model_dir = 'D:/Anindya/E/model'

# Train new NER model
def train_new_NER(model=None, output_dir=model_dir, n_iter=100):
    """Load the model, set up the pipeline and train the entity recognizer."""
    if model is not None:
        nlp = spacy.load(model)  # load existing spaCy model
        print("Loaded model '%s'" % model)
    else:
        nlp = spacy.blank("en")  # create blank Language class
        print("Created blank 'en' model")

    # create the built-in pipeline components and add them to the pipeline
    # nlp.create_pipe works for built-ins that are registered with spaCy
    if "ner" not in nlp.pipe_names:
        ner = nlp.create_pipe("ner")
        nlp.add_pipe(ner, last=True)
    # otherwise, get it so we can add labels
    else:
        ner = nlp.get_pipe("ner")

    # add labels
    for _, annotations in TRAIN_DATA:
        for ent in annotations.get("entities"):
            ner.add_label(ent[2])

    # get names of other pipes to disable them during training
    other_pipes = [pipe for pipe in nlp.pipe_names if pipe != "ner"]
    with nlp.disable_pipes(*other_pipes):  # only train NER
        # reset and initialize the weights randomly, but only if we're
        # training a new model
        if model is None:
            nlp.begin_training()
        for itn in range(n_iter):
            random.shuffle(TRAIN_DATA)
            losses = {}
            # batch up the examples using spaCy's minibatch
            batches = minibatch(TRAIN_DATA, size=compounding(4.0, 32.0, 1.001))
            for batch in batches:
                texts, annotations = zip(*batch)
                nlp.update(
                    texts,  # batch of texts
                    annotations,  # batch of annotations
                    drop=0.5,  # dropout - make it harder to memorise data
                    losses=losses,
                )
            print("Losses", losses)

    # test the trained model
    for text, _ in TRAIN_DATA:
        doc = nlp(text)
        print("Entities", [(ent.text, ent.label_) for ent in doc.ents])
        print("Tokens", [(t.text, t.ent_type_, t.ent_iob) for t in doc])

    # save model to output directory
    if output_dir is not None:
        output_dir = Path(output_dir)
        if not output_dir.exists():
            output_dir.mkdir()
        nlp.to_disk(output_dir)
        print("Saved model to", output_dir)

        # test the saved model
        print("Loading from", output_dir)
        nlp2 = spacy.load(output_dir)
        for text, _ in TRAIN_DATA:
            doc = nlp2(text)
            print("Entities", [(ent.text, ent.label_) for ent in doc.ents])
            print("Tokens", [(t.text, t.ent_type_, t.ent_iob) for t in doc])

# Finally train the model by calling the function above
train_new_NER()

After running the code above, you should find the saved model files in the specified folder.
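The training loop above sizes its batches with `compounding(4.0, 32.0, 1.001)`. The pure-Python stand-ins below sketch that behaviour under stated assumptions and are not spaCy's own code: the batch size starts at 4, is multiplied by 1.001 after every batch, is capped at 32, and each batch takes the integer part of the current size. The names `compounding_sketch` and `minibatch_sketch` are hypothetical, and the sketch assumes the start value is smaller than the stop value.

```python
import itertools


def compounding_sketch(start, stop, compound):
    """Yield start, start*compound, start*compound**2, ... capped at stop."""
    value = start
    while True:
        yield min(value, stop)
        value *= compound


def minibatch_sketch(items, size):
    """Group items into batches whose length follows the size generator."""
    items = iter(items)
    while True:
        batch = list(itertools.islice(items, int(next(size))))
        if not batch:
            break
        yield batch


sizes = compounding_sketch(4.0, 32.0, 1.001)
batch_lengths = [len(batch) for batch in minibatch_sketch(range(10), sizes)]
print(batch_lengths)  # ten items are grouped as [4, 4, 2]
```

With only two training sentences, as in this tutorial, every iteration therefore contains a single batch. The growing batch size only starts to matter with larger training sets.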

Test the newly trained NER model in spaCy

Now it's time to test our freshly trained NER model to see whether it works properly.

# Use the newly trained, saved model
print("Loading trained model from:", model_dir)
nlp2 = spacy.load(model_dir)
doc2 = nlp2('Who is Shaka Khan?')
for token in doc2:
    print(token, token.ent_type_)

Output:

Loading trained model from: D:/Anindya/E/model

Who

is

Shaka PERSON

Khan PERSON

?
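Checking one sentence by eye is fine for a quick test. For more sentences, one option is to compare the predicted entity spans with the annotated ones. The sketch below is an illustration only: `span_scores` is a hypothetical helper, and the predicted spans are hard-coded here. With a loaded model they could be collected from `doc.ents` as `(ent.start_char, ent.end_char, ent.label_)`.

```python
def span_scores(gold_spans, predicted_spans):
    """Return (precision, recall, f1) for exact-match entity spans."""
    gold = set(gold_spans)
    predicted = set(predicted_spans)
    true_positives = len(gold & predicted)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Annotations for 'I like London and Berlin.'
gold = [(7, 13, "LOC"), (18, 24, "LOC")]
# Hypothetical model output in which 'Berlin' was missed
predicted = [(7, 13, "LOC")]

precision, recall, f1 = span_scores(gold, predicted)
print(precision, recall, round(f1, 3))
```

Keep in mind that scores computed on the same sentences used for training say little about how the model behaves on new text.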

2. Update an existing spaCy NER model

In the code above we saw how to train a new custom NER model in spaCy. If instead we want to add what can be learned from the newly prepared custom data to spaCy's pretrained NER model, we can do that by updating the pretrained model. Let's do that.

updated_model_dir = 'D:/Anindya/E/updated_model'

## Update existing spaCy model and store it in a folder
def update_model(model='en_core_web_sm', output_dir=updated_model_dir, n_iter=100):
    """Load the model, set up the pipeline and train the entity recognizer."""
    if model is not None:
        nlp = spacy.load(model)  # load existing spaCy model
        print("Loaded model '%s'" % model)
    else:
        nlp = spacy.blank("en")  # create blank Language class
        print("Created blank 'en' model")

    # create the built-in pipeline components and add them to the pipeline
    # nlp.create_pipe works for built-ins that are registered with spaCy
    if "ner" not in nlp.pipe_names:
        ner = nlp.create_pipe("ner")
        nlp.add_pipe(ner, last=True)
    # otherwise, get it so we can add labels
    else:
        ner = nlp.get_pipe("ner")

    # add labels
    for _, annotations in TRAIN_DATA:
        for ent in annotations.get("entities"):
            ner.add_label(ent[2])

    # get names of other pipes to disable them during training
    other_pipes = [pipe for pipe in nlp.pipe_names if pipe != "ner"]
    with nlp.disable_pipes(*other_pipes):  # only train NER
        # reset and initialize the weights randomly, but only if we're
        # training a new model
        if model is None:
            nlp.begin_training()
        for itn in range(n_iter):
            random.shuffle(TRAIN_DATA)
            losses = {}
            # batch up the examples using spaCy's minibatch
            batches = minibatch(TRAIN_DATA, size=compounding(4.0, 32.0, 1.001))
            for batch in batches:
                texts, annotations = zip(*batch)
                nlp.update(
                    texts,  # batch of texts
                    annotations,  # batch of annotations
                    drop=0.5,  # dropout - make it harder to memorise data
                    losses=losses,
                )
            print("Losses", losses)

    # test the trained model
    for text, _ in TRAIN_DATA:
        doc = nlp(text)
        print("Entities", [(ent.text, ent.label_) for ent in doc.ents])
        print("Tokens", [(t.text, t.ent_type_, t.ent_iob) for t in doc])

    # save model to output directory
    if output_dir is not None:
        output_dir = Path(output_dir)
        if not output_dir.exists():
            output_dir.mkdir()
        nlp.to_disk(output_dir)
        print("Saved model to", output_dir)

# Finally train the model by calling the function above
update_model()

After running the code above, you should find the saved model files in the specified folder.

Test the updated NER model in spaCy

Now it's time to test our updated NER model to see whether it works properly.

# Use the updated, saved model
print("Loading updated model from:", updated_model_dir)
nlp2 = spacy.load(updated_model_dir)
doc2 = nlp2('Who is Shaka Khan?')
for token in doc2:
    print(token, token.ent_type_)

Output:

Loading updated model from: D:/Anindya/E/updated_model

Who

is

Shaka PERSON

Khan PERSON

?

In this tutorial I have walked you through:

- How to create spaCy-formatted training data for custom NER

- How to train a custom NER model using spaCy in Python

- How to update an existing spaCy NER model

Note: I have used the same text/data for training as the spaCy documentation, so that you can easily relate this tutorial to it.

If you have any questions or suggestions regarding this topic, see you in the comment section. I will try my best to answer.

Sir, one error. When I parse the JSON file, I get an error saying the index does not match, i.e. when I try to print TRAIN_DATA.

Rebuild train data created by webanno (explained in my previous post) and check again.

I just had a look at this blog; your error is due to a list index issue.

Replace the code line with this: TRAIN_DATA.append([sentences_list[sl-1],ent_dic])

and you are good to go.

Happy Coding

Pramod

More precisely, check the split function, as split('rn') is not working as expected.

Hi, I tried the solution that Pramod had mentioned, but I am still getting this error: File “convrt.py”, line 43, in

TRAIN_DATA.append([sentences_list[sl-1],ent_dic])

IndexError: list index out of range

Should the line be outside the for loop?

Hi Serlin,

Please ensure that you are using the same version of spacy to train custom NER, explained in this post.

Wonderful blog, with lots of useful information. What are your inspirations, and where do you draw such refined "creative power" from?